Preface

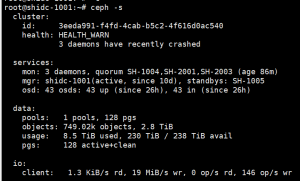

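I've recently been working with a Ceph cluster. The test environment has 4 physical Ceph nodes, each with 8 to 12 SATA/SAS hard disk drives, for a total of 43 OSDs. Performance is far below what I expected, and I'm still tuning the configuration...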

Since I had just benchmarked it, I'm sharing the results here for reference. A quick overview of the cluster is available from ceph -s:

Cluster configuration

4 Ceph nodes: SH-1001, SH-1003, SH-1004, SH-1005

2 Managers: SH-1001, SH-1005

3 Monitors: SH-1004, SH-2001, SH-2003

Running the tests

Internal network speed test

```
root@SH-1005:~# iperf3 -s
-----------------------------------------------------------
Server listening on 5201
-----------------------------------------------------------
Accepted connection from 10.1.0.1, port 42784
[  5] local 10.1.0.5 port 5201 connected to 10.1.0.1 port 42786
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-1.00   sec   649 MBytes  5.44 Gbits/sec
[  5]   1.00-2.00   sec   883 MBytes  7.41 Gbits/sec
[  5]   2.00-3.00   sec   689 MBytes  5.78 Gbits/sec
[  5]   3.00-4.00   sec   876 MBytes  7.35 Gbits/sec
[  5]   4.00-5.00   sec   641 MBytes  5.38 Gbits/sec
[  5]   5.00-6.00   sec   637 MBytes  5.34 Gbits/sec
[  5]   6.00-7.00   sec   883 MBytes  7.41 Gbits/sec
[  5]   7.00-8.00   sec   643 MBytes  5.40 Gbits/sec
[  5]   8.00-9.00   sec   889 MBytes  7.46 Gbits/sec
[  5]   9.00-10.00  sec   888 MBytes  7.45 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-10.00  sec  7.50 GBytes  6.44 Gbits/sec                  receiver
-----------------------------------------------------------
```
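As a quick sanity check, the receiver summary can be reproduced by hand. The sketch below assumes iperf3's convention of binary units for the Transfer column (1 MByte = 2^20 bytes, 1 GByte = 2^30 bytes) and decimal units for Bitrate (1 Gbit = 10^9 bits); the helper name transfer_to_gbps is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: convert an iperf3 "Transfer" value into Gbit/s.
# Assumption: Transfer uses binary units (KBytes = 2^10, MBytes = 2^20,
# GBytes = 2^30 bytes) while Bitrate uses decimal units (1 Gbit = 10^9 bits).
#   $1 = amount, $2 = unit as printed by iperf3, $3 = interval length (s)
transfer_to_gbps() {
  awk -v a="$1" -v u="$2" -v s="$3" 'BEGIN {
    if (u == "GBytes")      mult = 2^30
    else if (u == "MBytes") mult = 2^20
    else                    mult = 2^10   # treat anything else as KBytes
    printf "%.2f\n", a * mult * 8 / s / 1e9
  }'
}

transfer_to_gbps 7.50 GBytes 10   # whole run, prints 6.44
transfer_to_gbps 649 MBytes 1     # first interval, prints 5.44
```

Under those assumptions the numbers line up with the log: 7.50 GBytes in 10 s is about 6.44 Gbit/s on average, with the per-second samples alternating between roughly 5.3 and 7.5 Gbit/s.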

Create a benchmark pool

```
root@shidc-1001:~# ceph osd pool create scbench 128 128
pool 'scbench' created
```
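The two 128 arguments are the pool's pg_num and pgp_num. For context, older Ceph documentation gave a cluster-wide rule of thumb of (OSDs × 100) / replica count placement groups, rounded up to the next power of two, with that total shared across all pools. The sketch below applies that legacy rule; the replica count of 3 is an assumption (a common default, but the pool's size is not shown here), and the helper name suggest_total_pgs is hypothetical. Newer releases can also manage pg_num automatically through the pg_autoscaler module.

```shell
#!/bin/sh
# Hypothetical helper implementing the legacy PG rule of thumb:
#   total PGs ~= (OSDs * 100) / replica_count, rounded up to a power of two.
#   $1 = number of OSDs, $2 = replica count (assumed, e.g. 3)
suggest_total_pgs() {
  awk -v osds="$1" -v size="$2" 'BEGIN {
    target = osds * 100 / size        # 43 * 100 / 3 = 1433.33...
    pgs = 1
    while (pgs < target) pgs *= 2     # round up to the next power of two
    print pgs
  }'
}

suggest_total_pgs 43 3   # 43 OSDs, assumed 3 replicas: prints 2048
```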

Write test objects and measure write throughput

```
root@shidc-1001:~# rados bench -p scbench 10 write --no-cleanup
hints = 1
Maintaining 16 concurrent writes of 4194304 bytes to objects of size 4194304 for up to 10 seconds or 0 objects
Object prefix: benchmark_data_shidc-1001_1776112
  sec Cur ops   started  finished  avg MB/s  cur MB/s last lat(s)  avg lat(s)
    0       0         0         0         0         0           -           0
    1      16        79        63   251.982       252    0.307035    0.222323
    2      16       163       147   293.965       336    0.335647    0.205457
    3      16       249       233   310.613       344   0.0778651    0.197094
    4      16       318       302   301.948       276   0.0970851    0.206178
    5      16       403       387   309.547       340    0.155605    0.202748
    6      16       479       463   308.612       304    0.324706    0.203498
    7      16       564       548   313.088       340    0.363865    0.202039
    8      16       640       624   311.946       304    0.136735    0.202311
    9      16       726       710   315.501       344    0.121806    0.200126
   10      16       814       798   319.146       352    0.203508    0.199063
Total time run:         10.4176
Total writes made:      815
Write size:             4194304
Object size:            4194304
Bandwidth (MB/sec):     312.933
Stddev Bandwidth:       33.8257
Max bandwidth (MB/sec): 352
Min bandwidth (MB/sec): 252
Average IOPS:           78
Stddev IOPS:            8.45642
Max IOPS:               88
Min IOPS:               63
Average Latency(s):     0.203392
Stddev Latency(s):      0.0820546
Max latency(s):         0.566828
Min latency(s):         0.0726562
```

Sequential read test

```
root@shidc-1001:~# rados bench -p scbench 10 seq
hints = 1
  sec Cur ops   started  finished  avg MB/s  cur MB/s last lat(s)  avg lat(s)
    0       0         0         0         0         0           -           0
    1      16       178       162   647.759       648   0.0526692   0.0907286
    2      16       331       315   629.819       612   0.0749324   0.0979026
    3      15       505       490   652.898       700   0.0627999   0.0953559
    4      15       677       662   661.631       688     0.12783   0.0942633
Total time run:       4.94436
Total reads made:     815
Read size:            4194304
Object size:          4194304
Bandwidth (MB/sec):   659.337
Average IOPS:         164
Stddev IOPS:          10.0167
Max IOPS:             175
Min IOPS:             153
Average Latency(s):   0.0951642
Max latency(s):       0.537939
Min latency(s):       0.0263158
```
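The sequential run stopped after about 4.9 s rather than the requested 10 s. seq reads back the objects left behind by the write run's --no-cleanup, and all 815 of them had been read by then (Total reads made: 815). The expected duration is objects × object size ÷ bandwidth; a small sketch with a hypothetical helper name read_duration:

```shell
#!/bin/sh
# Hypothetical helper: how long should reading N objects take at a given rate?
#   $1 = object count, $2 = object size in bytes, $3 = bandwidth in MiB/s
read_duration() {
  awk -v n="$1" -v b="$2" -v bw="$3" 'BEGIN {
    printf "%.2f\n", n * b / 2^20 / bw   # seconds
  }'
}

read_duration 815 4194304 659.337   # prints 4.94, matching "Total time run"
```

A longer write phase, leaving more objects behind, would likely be needed for seq to run for its full duration.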

Random read test

```
root@shidc-1001:~# rados bench -p scbench 10 rand
hints = 1
  sec Cur ops   started  finished  avg MB/s  cur MB/s last lat(s)  avg lat(s)
    0       0         0         0         0         0           -           0
    1      16       217       201   803.739       804    0.412755   0.0688957
    2      16       388       372   743.811       684   0.0673436   0.0817843
    3      16       595       579   771.824       828    0.118287   0.0801714
    4      15       807       792   791.827       852   0.0863314   0.0786471
    5      16      1024      1008    806.23       864   0.0154356   0.0763434
    6      16      1247      1231   820.494       892   0.0249661   0.0758687
    7      15      1495      1480   845.535       996    0.052225   0.0739281
    8      16      1682      1666   832.822       744  0.00785624   0.0747323
    9      16      1891      1875   833.156       836    0.118143   0.0743894
   10      16      2157      2141   856.212      1064    0.069138   0.0729575
Total time run:       10.1116
Total reads made:     2158
Read size:            4194304
Object size:          4194304
Bandwidth (MB/sec):   853.676
Average IOPS:         213
Stddev IOPS:          27.6705
Max IOPS:             266
Min IOPS:             171
Average Latency(s):   0.0734673
Max latency(s):       0.461495
Min latency(s):       0.00386068
```
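To put the three totals in perspective against the 43 OSDs, they can be spread across the disks. This sketch assumes a replicated pool of size 3 (the pool size is not shown in the output above), so each client write lands on three OSDs, while reads are served by the primary OSD only. It ignores WAL/journal and metadata overhead, so the results are rough lower bounds on per-disk work. The per_osd helper is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: average per-OSD throughput for a rados bench result.
#   $1 = client bandwidth (MiB/s), $2 = number of OSDs,
#   $3 = OSD copies touched per client op (assumed: 3 for replicated
#        writes, 1 for reads served by the primary OSD)
per_osd() {
  awk -v bw="$1" -v osds="$2" -v copies="$3" 'BEGIN {
    printf "%.2f\n", bw * copies / osds
  }'
}

echo "write: $(per_osd 312.933 43 3) MiB/s per OSD"   # assumes size=3
echo "seq:   $(per_osd 659.337 43 1) MiB/s per OSD"
echo "rand:  $(per_osd 853.676 43 1) MiB/s per OSD"
```

That works out to roughly 15 to 22 MiB/s per disk, well below what a single SATA/SAS HDD can usually stream sequentially. Keep in mind that only 16 operations were in flight at a time across 43 OSDs, so most disks were idle at any given moment.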

Clean up the test objects

```
root@shidc-1001:~# rados -p scbench cleanup
Removed 815 objects
```
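The benchmark steps in this post can be wrapped in one script, which makes it easy to rerun the same sequence after each configuration change. This is a minimal sketch: the pool name, PG count and duration default to the values used here, the POOL/PGS/SECS/DRY_RUN variables and the bench_all function are hypothetical names, and the only commands it invokes are the ceph and rados calls already shown.

```shell
#!/bin/sh
set -eu

# Hypothetical wrapper around the benchmark steps in this post;
# the defaults match the values used above.
POOL=${POOL:-scbench}
PGS=${PGS:-128}
SECS=${SECS:-10}
# Safety default: only print the commands unless DRY_RUN=0 is set.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

bench_all() {
  run ceph osd pool create "$POOL" "$PGS" "$PGS"
  run rados bench -p "$POOL" "$SECS" write --no-cleanup
  run rados bench -p "$POOL" "$SECS" seq
  run rados bench -p "$POOL" "$SECS" rand
  run rados -p "$POOL" cleanup
}

bench_all
```

Printing by default is a deliberate choice so the script never touches the cluster by accident. To actually run it (saved, say, as a hypothetical bench.sh) with a longer duration: SECS=60 DRY_RUN=0 sh bench.sh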